How does Auto-GPT work?

In this note, we'll take a look at how Auto-GPT works and discuss LLMs' ability to reason explicitly and to act as autonomous agents. We'll touch on a few related works: WebGPT, Toolformer, and Langchain.

First, let's watch this Auto-GPT demo (source, repo). The AI narrates its "thought" process while writing and correcting its own code.

🤯... well, wow... but it's also kind of expected and easy to explain. I think the wow factor mainly comes from the following:

- (1) How much we underestimate ChatGPT's ability to understand and follow instructions/prompts (see "Testing the prompt")

- (2) How autonomous it feels when we allow multi-turn / multi-step interactions with GPT models (i.e., the power of a for-loop)

Let's take a quick look at how Auto-GPT works, and then talk about a few related things: WebGPT, Toolformer, and Langchain.

Understanding Auto-GPT

Note: the Auto-GPT codebase evolves quickly, so it has probably changed from what's described below (retrieved 4/8/2023).

A long well-engineered prompt

Here is the prompt.txt. It (1) gives overall constraints and requirements, (2) provides a list of commands GPT is allowed to use, and (3) specifies the format of its output.

CONSTRAINTS:

1. ~4000 word limit for short term memory. Your short term memory is short, so immediately save important information to files.

2. If you are unsure how you previously did something or want to recall past events, thinking about similar events will help you remember.

3. No user assistance

4. Exclusively use the commands listed in double quotes e.g. "command name"

COMMANDS:

1. Google Search: "google", args: "input": "<search>"

5. Browse Website: "browse_website", args: "url": "<url>", "question": "<what_you_want_to_find_on_website>"

6. Start GPT Agent: "start_agent", args: "name": "<name>", "task": "<short_task_desc>", "prompt": "<prompt>"

7. Message GPT Agent: "message_agent", args: "key": "<key>", "message": "<message>"

8. List GPT Agents: "list_agents", args: ""

9. Delete GPT Agent: "delete_agent", args: "key": "<key>"

10. Write to file: "write_to_file", args: "file": "<file>", "text": "<text>"

11. Read file: "read_file", args: "file": "<file>"

12. Append to file: "append_to_file", args: "file": "<file>", "text": "<text>"

13. Delete file: "delete_file", args: "file": "<file>"

14. Search Files: "search_files", args: "directory": "<directory>"

15. Evaluate Code: "evaluate_code", args: "code": "<full _code_string>"

16. Get Improved Code: "improve_code", args: "suggestions": "<list_of_suggestions>", "code": "<full_code_string>"

17. Write Tests: "write_tests", args: "code": "<full_code_string>", "focus": "<list_of_focus_areas>"

18. Execute Python File: "execute_python_file", args: "file": "<file>"

19. Task Complete (Shutdown): "task_complete", args: "reason": "<reason>"

20. Generate Image: "generate_image", args: "prompt": "<prompt>"

RESOURCES:

1. Internet access for searches and information gathering.

2. Long Term memory management.

3. GPT-3.5 powered Agents for delegation of simple tasks.

4. File output.

PERFORMANCE EVALUATION:

1. Continuously review and analyze your actions to ensure you are performing to the best of your abilities.

2. Constructively self-criticize your big-picture behavior constantly.

3. Reflect on past decisions and strategies to refine your approach.

4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.

You should only respond in JSON format as described below

RESPONSE FORMAT:

{

"thoughts":

{

"text": "thought",

"reasoning": "reasoning",

"plan": "- short bulleted\n- list that conveys\n- long-term plan",

"criticism": "constructive self-criticism",

"speak": "thoughts summary to say to user"

},

"command": {

"name": "command name",

"args":{

"arg name": "value"

}

}

}

Ensure the response can be parsed by Python json.loads
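To make the response format concrete, here is a minimal sketch of parsing such a reply and pulling out the command. The reply text is a made-up example, and `parse_reply` is a hypothetical helper, not a function from the Auto-GPT codebase.

```python
import json

# Hypothetical reply text in the RESPONSE FORMAT above (not real model output).
reply = """
{
  "thoughts": {
    "text": "I should search for background information.",
    "reasoning": "A search is the cheapest way to get started.",
    "plan": "- search\\n- read results\\n- save notes to a file",
    "criticism": "I should avoid repeating the same query.",
    "speak": "I will search the web first."
  },
  "command": {"name": "google", "args": {"input": "Auto-GPT"}}
}
"""

def parse_reply(text):
    """Return (command_name, args), or ("error", {...}) if the reply is unusable."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        return "error", {"message": f"invalid JSON: {exc}"}
    command = data.get("command") if isinstance(data, dict) else None
    if not isinstance(command, dict) or "name" not in command:
        return "error", {"message": "missing 'command'"}
    return command["name"], command.get("args", {})

name, args = parse_reply(reply)
print(name, args)
```

Returning an error pair instead of raising lets the caller feed the problem back to the model as the result of that round.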

An execution loop

The steps in the loop are:

- (1) Take the user's input describing the AI's role and its goals.

- (2) Construct the current context. This is the message to send to the AI.

- (3) Call OpenAI's chat API with the current context as the message in the request (more on how to construct this later).

- (4) Parse the chat reply and extract the command to run (the list of commands is in the prompt.txt file above; it includes sending a message to the AI).

- (5) Run the command and get the result.

- (6) Save the reply and result into memory (more on this later).

- (7) Append the result as a new message in the chat history (with the role of "system").

- (8) Repeat Steps 2-7 until the command is "Task Complete."
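The loop above can be sketched in a few lines. This is a simplified illustration, not Auto-GPT's actual code: `fake_chat` stands in for the OpenAI chat API call, `FILES` stands in for the file system, and only one command is implemented. All of these names are assumptions made for the example.

```python
import json

def fake_chat(messages):
    """Stand-in for the chat API: write one note, then declare the task done."""
    wrote = any("write_to_file returned" in m["content"] for m in messages)
    if wrote:
        command = {"name": "task_complete", "args": {"reason": "note saved"}}
    else:
        command = {"name": "write_to_file",
                   "args": {"file": "notes.txt", "text": "hello"}}
    return json.dumps({"thoughts": {"text": "..."}, "command": command})

FILES = {}  # in-memory stand-in for the file system

def run_command(name, args):
    if name == "write_to_file":
        FILES[args["file"]] = args["text"]
        return "File written successfully."
    return f"Unknown command '{name}'"

def run_agent(goals, chat=fake_chat, max_steps=10):
    history = [{"role": "user", "content": goals}]        # step 1: user input
    memory = []
    for _ in range(max_steps):                             # step 8: repeat
        # Step 2: build the context (prompt placeholder + chat history).
        context = [{"role": "system", "content": "<prompt>"}] + history
        reply = chat(context)                              # step 3: call the model
        command = json.loads(reply)["command"]             # step 4: parse the reply
        history.append({"role": "assistant", "content": reply})
        if command["name"] == "task_complete":
            break
        result = run_command(command["name"], command["args"])  # step 5
        result_text = f"Command {command['name']} returned: {result}"
        memory.append(reply + "\n" + result_text)          # step 6: save to memory
        history.append({"role": "system", "content": result_text})  # step 7
    return history, memory

history, memory = run_agent("Save a note to a file")
print(len(history), FILES)
```

The `max_steps` cap is an addition for safety in the sketch, so a model that never emits "task_complete" cannot loop forever.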

Memory and its retrieval

Memory is an external system (e.g., a file, a database, or a search index) where we store some history (e.g., results from command runs) and later retrieve it, based on the current context, to enrich what the AI sees. Exactly what to save and what to retrieve is a design choice we can make for each application.

It seems the retrieval is often done based on vector similarity (e.g., text encoded into embeddings and then KNN retrieval). Looking at a few open-source projects, Pinecone seems to be a very popular choice for implementing this Vector DB (Kudos to Edo and the team!!).

In the case of Auto-GPT:

- The entries we save to memory are the chat reply, command run result, and user input concatenated with some separators.

- The retrieval happens in the step where we build the context. We query the memory DB for the entries most similar to the latest 5 entries in the chat history, and retrieve up to 10 of them. We refer to the retrieved items as the relevant memory.
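As a rough illustration of similarity-based retrieval, here is a small sketch. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and the `Memory` class is a hypothetical in-process replacement for a vector DB. Neither is taken from Auto-GPT.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class Memory:
    def __init__(self):
        self.entries = []  # list of (text, vector) pairs

    def add(self, text):
        self.entries.append((text, embed(text)))

    def get_relevant(self, query, k=10):
        """Return up to k stored texts, most similar to the query first."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

memory = Memory()
memory.add("google search result: pinecone is a vector database")
memory.add("file notes.txt written successfully")
memory.add("browse result: toolformer teaches models to call APIs")

# The query is built from the most recent chat history entries.
recent_history = ["what is a vector database"]
top = memory.get_relevant(" ".join(recent_history), k=2)
print(top[0])
```

A real setup would swap `embed` for a learned embedding and the linear scan for an approximate nearest-neighbor index, but the retrieve-by-similarity shape stays the same.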

Constructing the context

The context combines the prompt, relevant memory, and the chat history so far. In each round, the context is the message sent to the OpenAI chat API, so it is arguably the most important piece of this system.

The Prompt is created by concatenating:

- a preamble, "Your decisions must always be made independently without seeking user assistance. Play to your strengths as an LLM and pursue simple strategies with no legal complications."

- a list of goals provided by the user

- text from the prompt.txt above

The construction of relevant memory is described in the previous section.

The context is of the form:

[

{

"role": "system",

"content": "<prompt>",

},

{

"role": "system",

"content": "Permanent memory: <relevant_memory>",

},

{/* Chat history up to N entries subject to token limit */}

]

By the way, the chat history looks like this:

[

...,

{

"role": "user",

"content": "<user_input>",

},

{

"role": "assistant",

"content": "<chat_reply> of the i-th round",

},

{

"role": "system",

"content": "<result> of the i-th round",

},

...

]

Then there is some logic managing the token limit in constructing the context, e.g., dropping chat history entries from too far back so everything can fit into the token limit.
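Here is one way the context assembly and token-limit handling could look. It is a sketch under simplifying assumptions: `count_tokens` counts whitespace-separated words rather than real tokens, and `build_context` is a hypothetical name, not the function used in Auto-GPT.

```python
def count_tokens(message):
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(message["content"].split())

def build_context(prompt, relevant_memory, history, token_limit):
    # The prompt and the relevant memory always go first.
    fixed = [
        {"role": "system", "content": prompt},
        {"role": "system", "content": f"Permanent memory: {relevant_memory}"},
    ]
    used = sum(count_tokens(m) for m in fixed)
    kept = []
    # Walk the history from newest to oldest, keeping entries while they fit.
    for message in reversed(history):
        cost = count_tokens(message)
        if used + cost > token_limit:
            break
        kept.insert(0, message)  # preserve chronological order
        used += cost
    return fixed + kept

history = [
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "system", "content": "six"},
]
context = build_context("be helpful", ["note"], history, token_limit=9)
print([m["content"] for m in context])
```

With a limit of 9, the oldest history entry no longer fits and is dropped, while the two most recent entries are kept in order.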

Related things

Auto-GPT is one example that showcases two things:

- Explicit reasoning: Ask the model to do more self-reflection (e.g., thoughts, reasoning, self-criticism).

- Becoming an autonomous Agent: Let LLM act as an agent to use tools and interact with external systems (e.g., browser, vector DB, code interpreter, file systems, and APIs).

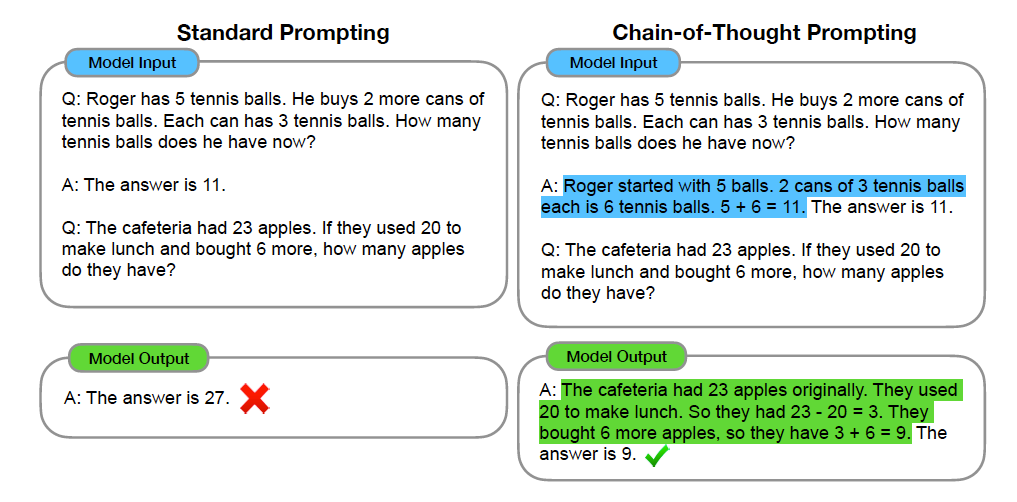

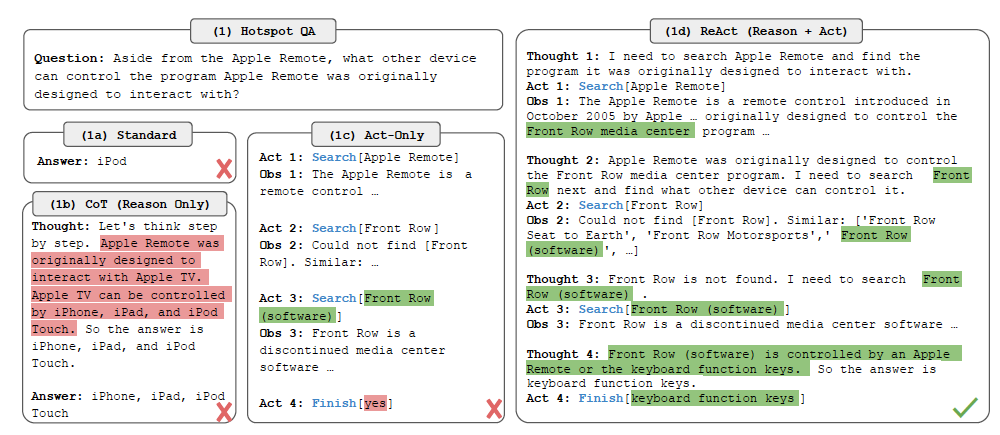

Chain-of-Thought and ReAct

For reasoning, I think Chain-of-Thought prompting (CoT) [1] and its later extension ReAct [2] are good examples of how to get an LLM to reason more explicitly through each step and so complete tasks better. Both provide few-shot examples in the prompt that include step-by-step reasoning (in CoT) or thought-act-observation trajectories (in ReAct), in the hope that the model will reason step by step as well and get the right answer more often (which turned out to be extremely effective).

WebGPT and Toolformer

For being an agent and using external tools (and handling multi-turn conversations or problem-solving), WebGPT [3] and Toolformer [4] are good examples. In the WebGPT paper, the authors collected examples of humans using a browser to answer questions and used them to fine-tune GPT-3 to answer long-form questions in a text-based web browsing environment (i.e., teaching GPT-3 to use browser "commands").

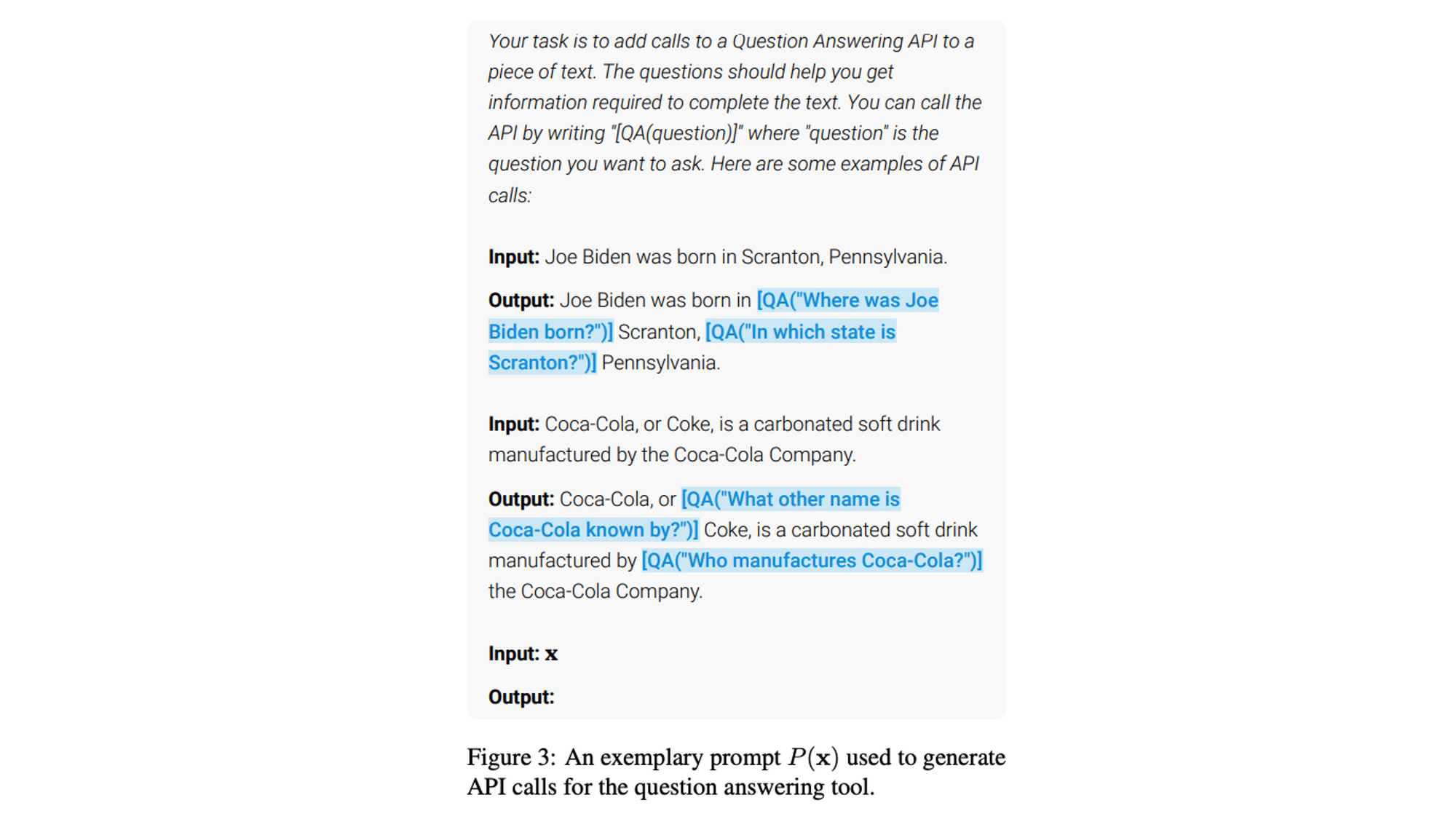

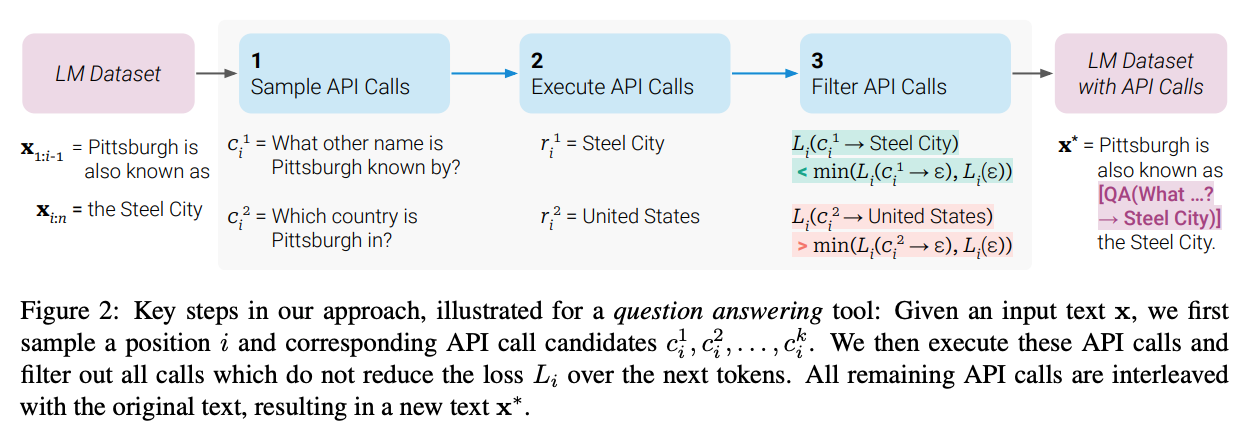

The setup in Toolformer is more interesting. It asks the LLM to annotate text with potential API calls.

"We then execute these API calls and finally check whether the obtained responses are helpful for predicting future tokens; this is used as a filtering criterion. After filtering, we merge API calls for different tools, resulting in the augmented dataset, and finetune the model."
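A simplified reading of this filtering step is sketched below: keep an annotated API call only if including its result lowers the language-model loss on the following tokens by at least some threshold. The `Candidate` class, the loss values, and the threshold are all made up for illustration. The paper's exact criterion and numbers are not reproduced here.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    call: str                # annotated API call text
    loss_with_result: float  # LM loss on following tokens given call + result
    loss_without: float      # LM loss on the same tokens without the call

def filter_calls(candidates, threshold):
    """Keep calls whose result lowers the loss by at least `threshold`."""
    return [c for c in candidates
            if c.loss_without - c.loss_with_result >= threshold]

# Hypothetical loss values, chosen only to show one kept and one dropped call.
candidates = [
    Candidate("Calculator(400 / 1400)", loss_with_result=1.2, loss_without=2.5),
    Candidate("Calendar()", loss_with_result=2.4, loss_without=2.5),
]
kept = filter_calls(candidates, threshold=1.0)
print([c.call for c in kept])
```

The surviving calls would then be merged back into the text to form the augmented dataset used for finetuning.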

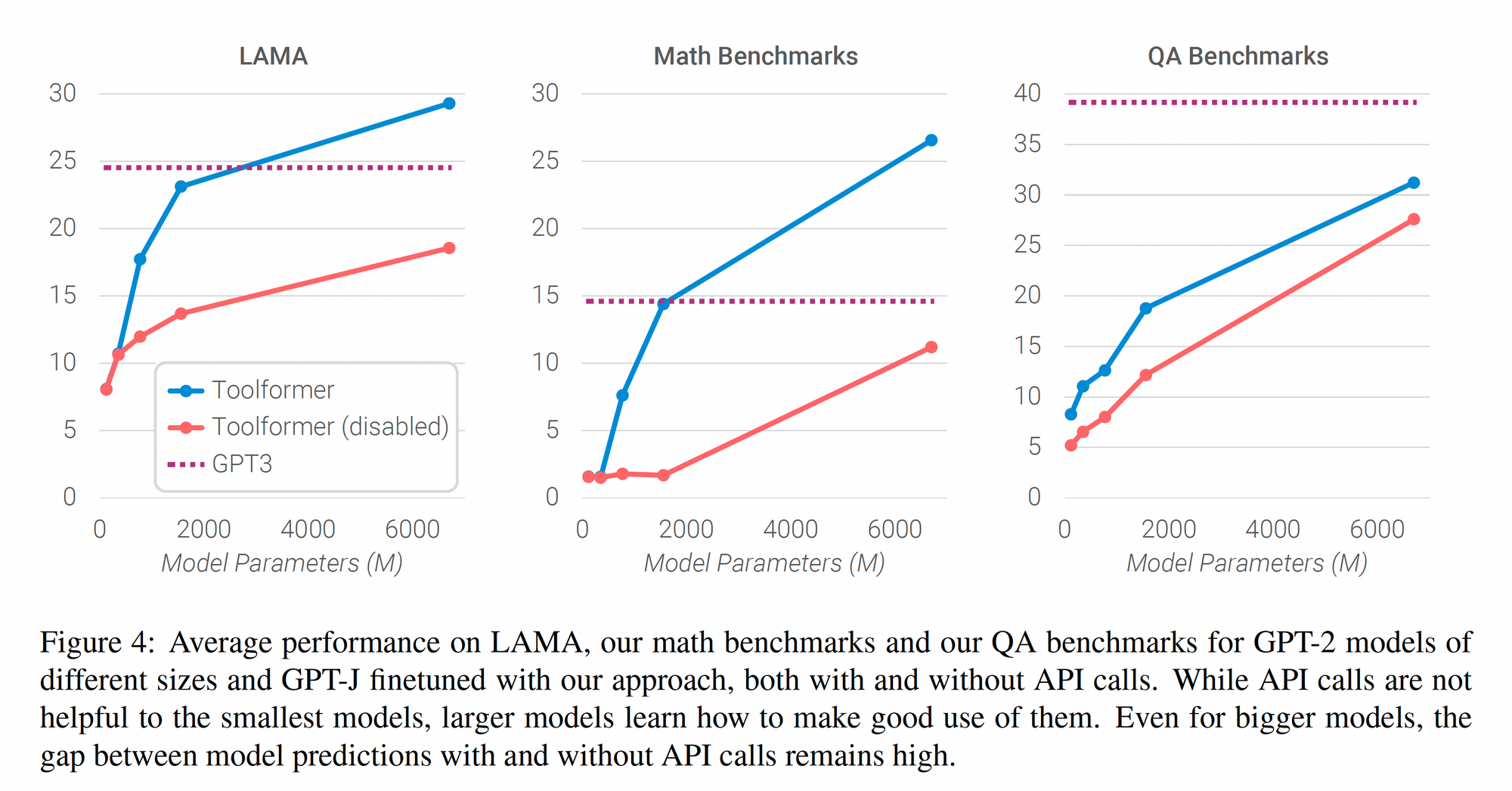

Compared to GPT-3 (175B parameters), the model used in Toolformer is substantially smaller (6B parameters at most). As shown in the following figure, the two takeaways seem to be:

- (1) the method in Toolformer improved model performance, and the 6B model exceeded the 175B model on multiple tasks.

- (2) the method isn't very useful for very small models (<1B parameters); those models seem unable to learn how to use the tools effectively.

Langchain and ChatGPT plugins

Recently, ChatGPT added plugins [5], formally supporting these tool-using abilities.

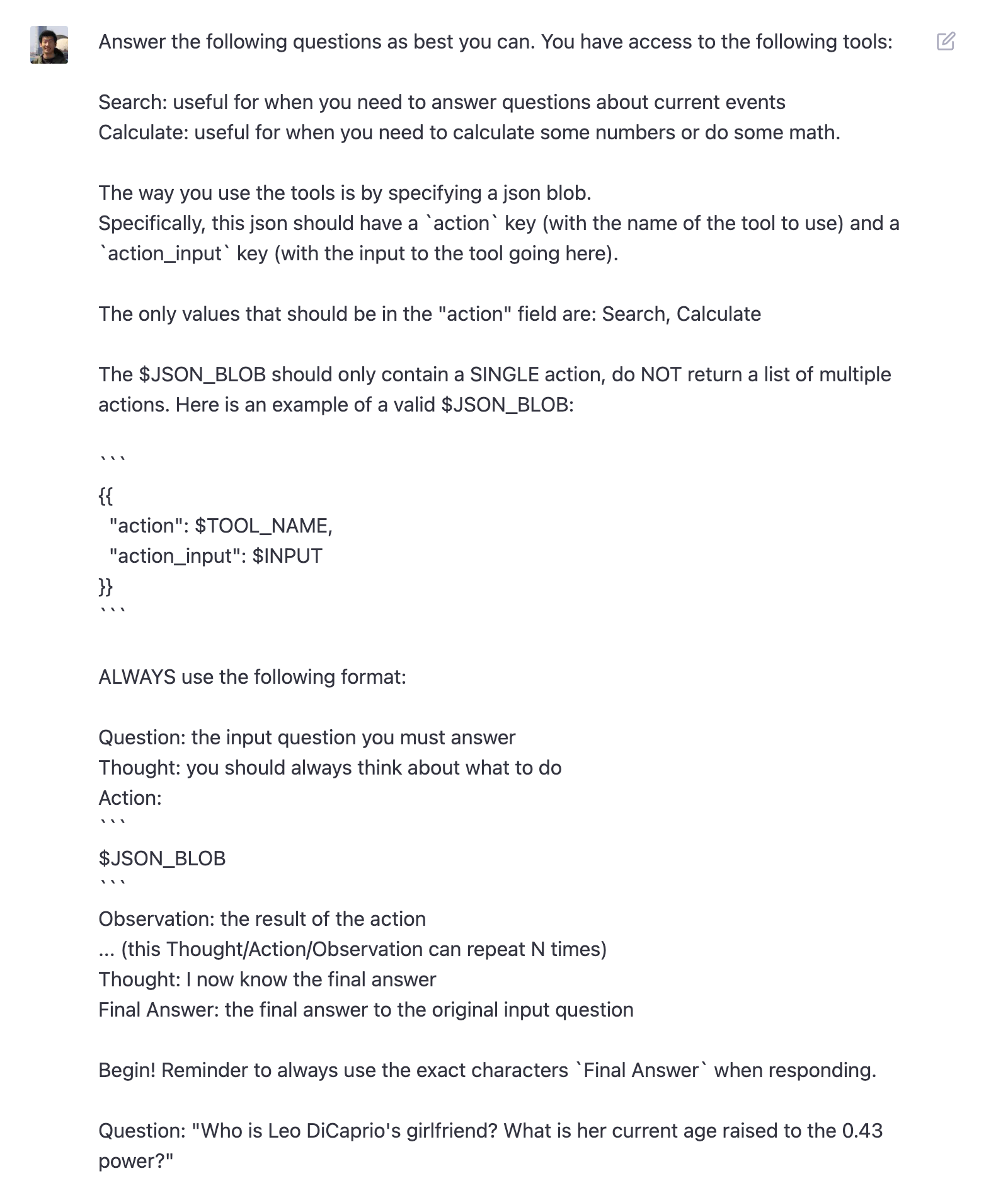

A lot of similar functionality was community-supported by tools like Langchain [6]. From skimming through Langchain's codebase, it seems to be heavily influenced by ReAct [2]. The "Thought", "Action", and "Observation" steps probably informed the prompt design in Auto-GPT as well.

# Set up the base template

template = """Answer the following questions as best you can, but speaking as a pirate might speak. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin! Remember to speak as a pirate when giving your final answer. Use lots of "Arg"s

Question: {input}

{agent_scratchpad}"""

Testing the Prompt

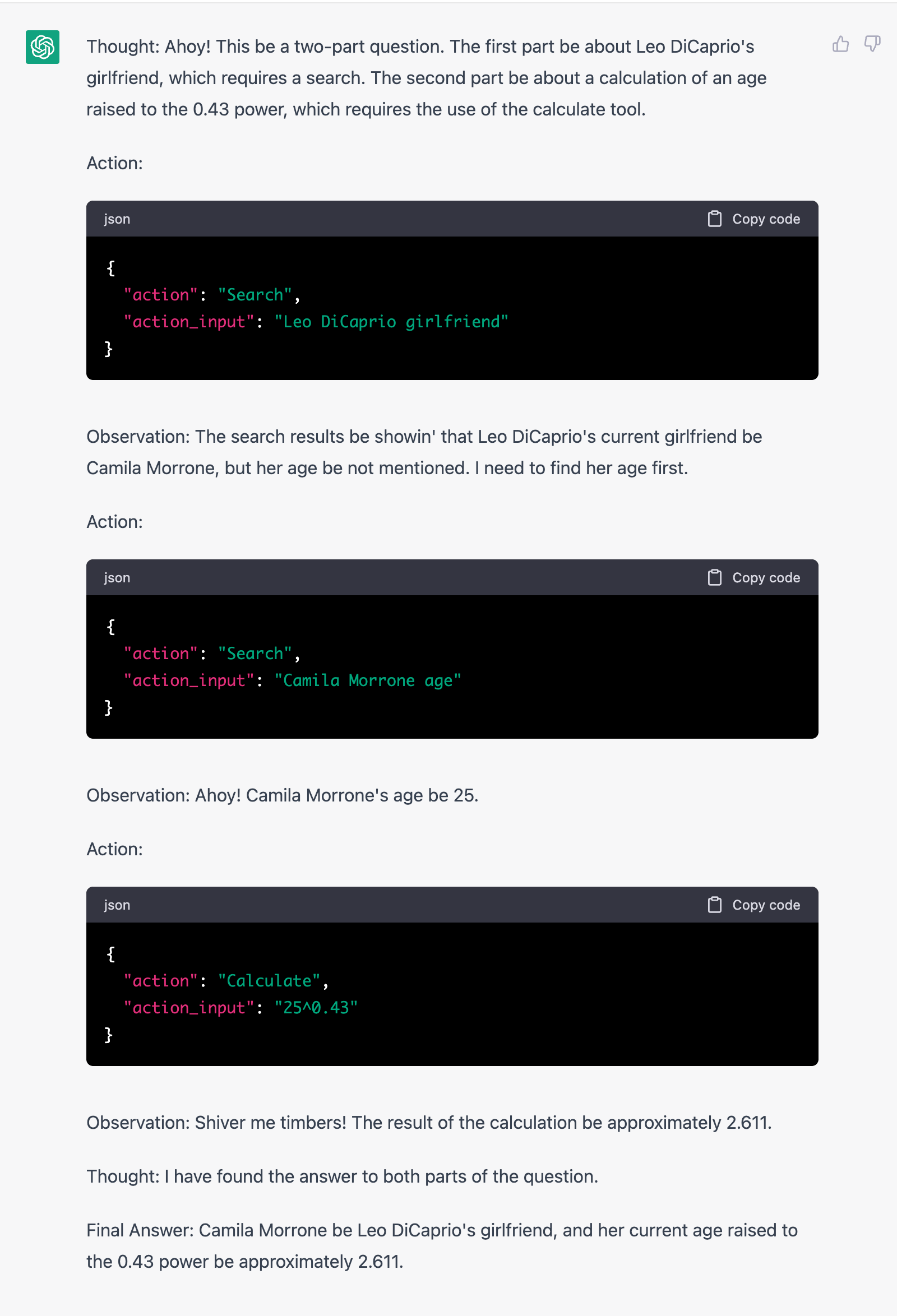

I want to see how well ChatGPT can follow the instructions and use tools. Following the Prompt from Langchain above, I asked two questions and provided two fictitious tools (`Search` and `Calculate`). This is just off-the-shelf ChatGPT (with GPT-4) without additional training like WebGPT and Toolformer. ChatGPT actually did quite well in terms of breaking down the task step by step and using tools appropriately.
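In my test, I played the role of the tools by hand. To automate that, the model's output has to be parsed and routed to a tool. Below is a minimal sketch of that step, assuming the Thought/Action/Action Input format from the template. The `search` and `calculate` functions are fictitious stand-ins (a canned lookup and a two-operand calculator), and `step` is a hypothetical helper, not Langchain's parser.

```python
import operator
import re

OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}

def calculate(expression):
    """Fictitious tool: evaluates '<number> <op> <number>' only."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))

def search(query):
    """Fictitious tool: returns a canned string instead of searching the web."""
    return f"(canned search result for: {query})"

TOOLS = {"Search": search, "Calculate": calculate}

ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)")

def step(model_output):
    """Return the Observation for one model turn, or None on a final answer."""
    if "Final Answer:" in model_output:
        return None
    match = ACTION_RE.search(model_output)
    if match is None:
        return "Could not parse an action."
    tool, tool_input = match.group(1), match.group(2).strip()
    if tool not in TOOLS:
        return f"Unknown tool: {tool}"
    return TOOLS[tool](tool_input)

output = "Thought: I need to multiply.\nAction: Calculate\nAction Input: 2 * 21"
observation = step(output)
print("Observation:", observation)
```

The observation would be appended to the scratchpad and sent back to the model, which repeats until it emits a "Final Answer".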

Takeaways

In the direction of making autonomous agents out of LLMs, there are two groups of methods.

- Tool usage-oriented fine-tuning/training: e.g., WebGPT, Toolformer

- Fully relying on well-engineered prompts, without fine-tuning a baseline instruction-following model: e.g., Langchain, Auto-GPT

I think the ChatGPT plugin capability probably used a combination of both.

References

[1] Wei, Jason, et al. "Chain of thought prompting elicits reasoning in large language models." arXiv preprint arXiv:2201.11903 (2022).

[2] Yao, Shunyu, et al. "ReAct: Synergizing reasoning and acting in language models." arXiv preprint arXiv:2210.03629 (2022).

[3] Nakano, Reiichiro, et al. "WebGPT: Browser-assisted question-answering with human feedback." arXiv preprint arXiv:2112.09332 (2021).

[4] Schick, Timo, et al. "Toolformer: Language models can teach themselves to use tools." arXiv preprint arXiv:2302.04761 (2023).

[5] ChatGPT plugin https://openai.com/blog/chatgpt-plugins

[6] Langchain https://github.com/hwchase17/langchain